This post introduces the Docker bridge network and explains how it works.

Environment

The tests below were run in the following environment:

- OS: Ubuntu 18.04
- Docker: 18.03.1-ce

Checking the current Docker network state

This is the docker network state right after installing Docker 18.03.1 on Ubuntu 18.04:

```shell
$ docker network ls
```

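As shown above, a Linux host with a fresh Docker install has three networks by default. They are named bridge, host and none, and they use the bridge, host and null drivers respectively.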
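A network's name and its driver are easy to confuse, for example the `none` network uses the `null` driver. The sketch below prints the driver for a given network name. It relies only on the `--format` option of `docker network ls` with the `.Name` and `.Driver` placeholders. The function name `driver_of` is hypothetical.

```shell
# Hypothetical helper: print the driver used by the network with the given name.
# Relies only on `docker network ls --format` with the .Name and .Driver placeholders.
driver_of() {
  docker network ls --format '{{.Name}} {{.Driver}}' |
    awk -v net="$1" '$1 == net { print $2 }'
}
```

On a fresh install, `driver_of none` should print `null` and `driver_of bridge` should print `bridge`.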

The rest of this post takes a closer look at how the bridge network works.

Overview

First, a short conceptual explanation of the Docker bridge network:

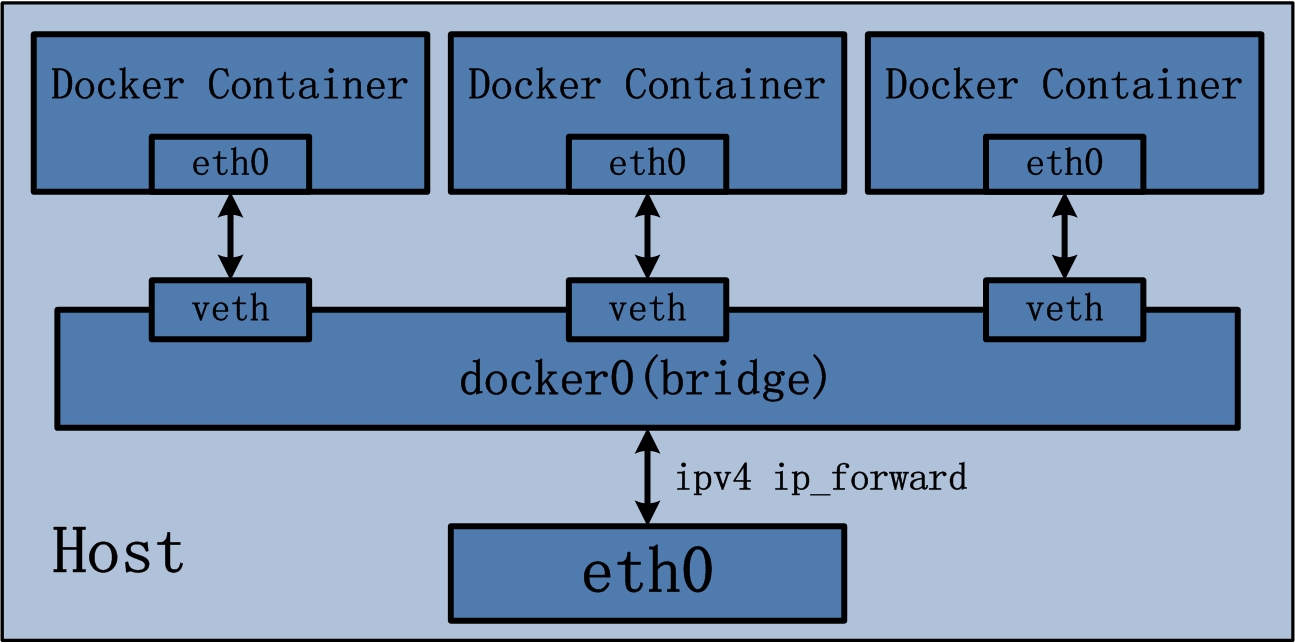

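A bridge network uses a software bridge on the Docker host to give containers outbound connectivity. Containers attached to the same bridge can also communicate with each other through it. The Docker bridge driver automatically configures the matching rules on the host (iptables rules, network namespaces) so that container networking works correctly.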
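One of the rules the bridge driver adds is a source NAT (MASQUERADE) rule in the POSTROUTING chain of the `nat` table. This rule is what lets containers reach the outside world through the host. The sketch below lists those rules. It assumes root privileges and an iptables-based host such as the Ubuntu 18.04 setup above. `nat_rules_for` is a hypothetical name.

```shell
# Hypothetical helper: list MASQUERADE rules in the nat table's POSTROUTING chain
# that mention the given bridge interface (e.g. docker0). Needs root to read iptables.
nat_rules_for() {
  iptables -t nat -S POSTROUTING | grep -- 'MASQUERADE' | grep -- "$1"
}
```

On a default install, `nat_rules_for docker0` typically prints `-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE`.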

A bridge network handles communication between containers running on a single Docker daemon. For communication between containers on multiple Docker daemons, you need an overlay network instead.

The figure below shows the architecture of the bridge network.

Using the default bridge network (docker0)

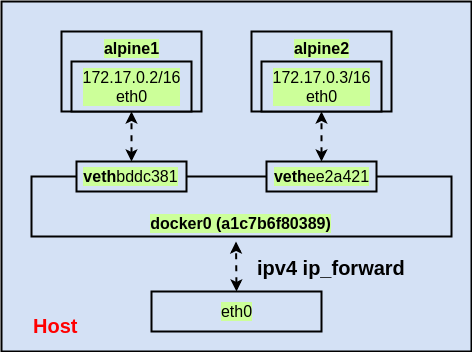

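Once Docker is installed, it already provides a bridge called docker0 that serves as the containers' outbound software bridge. In Docker's own terms, this is the network named `bridge`, backed by the Linux bridge interface `docker0`. The examples below start with docker0.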
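docker0 is an ordinary host interface, so standard iproute2 tools can show the gateway address it gives containers. The sketch below assumes the `ip` command from iproute2 is available on the host. `iface_ipv4` is a hypothetical name.

```shell
# Hypothetical helper: print the IPv4 address/prefix assigned to a host
# interface such as docker0, using iproute2's one-line output (-o).
iface_ipv4() {
  ip -4 -o addr show dev "$1" | awk '{ print $4 }'
}
```

On a default install `iface_ipv4 docker0` often prints `172.17.0.1/16`. Docker may pick another range if that subnet is already in use on the host.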

Creating containers

First, create the containers we need and inspect the network state:

```shell
# Start alpine linux container 1 (alpine1)
```
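The sketch below starts containers on the default bridge, assuming the `alpine` image and its `ash` shell. It follows Docker's standalone-networking tutorial and is not necessarily the author's exact command. When no `--network` flag is given, Docker attaches a container to the default `bridge` network (docker0). `start_on_default_bridge` is a hypothetical helper name.

```shell
# Hypothetical helper: start one detached alpine container per name given.
# With no --network flag, each container joins the default bridge (docker0).
start_on_default_bridge() {
  local name
  for name in "$@"; do
    # -d: run in background; -i/-t: keep an interactive ash shell alive
    docker run -dit --name "$name" alpine ash
  done
}
```

For example, `start_on_default_bridge alpine1 alpine2` creates the two containers used in this section.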

The network architecture now looks like this:

Testing container networking

With the network architecture above in mind, let's test container networking:

```shell
# Check the container IPs
```

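The test above shows that both containers use the same bridge (docker0), so they can reach each other by IP address. They cannot reach each other by domain name, though, because they cannot resolve each other's names.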
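The IP-versus-name difference can be reproduced by first looking up a container's address with `docker inspect`. The Go template `index` function is used because network names such as `alpine-net` contain a hyphen and cannot be accessed with plain dot syntax. `ip_on_network` is a hypothetical helper name.

```shell
# Hypothetical helper: print a container's IP address on a given network.
# `index` is needed because names such as "alpine-net" contain a hyphen.
ip_on_network() {
  docker inspect -f "{{(index .NetworkSettings.Networks \"$2\").IPAddress}}" "$1"
}
```

With that helper, `docker exec alpine1 ping -c 2 "$(ip_on_network alpine2 bridge)"` is expected to succeed. By contrast, `docker exec alpine1 ping -c 2 alpine2` fails to resolve the name on the default bridge.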

Using a user-defined bridge network

Creating a custom docker network

This time we still use the bridge driver, but we create a custom-named docker network with the `docker network create` command. Here it is named alpine-net:

```shell
$ docker network create --driver bridge alpine-net
```

As the result above shows, the newly created docker network (alpine-net) is assigned a different subnet from the one docker0 uses.
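The subnet each network received can be read from the IPAM section of `docker network inspect`. This is a minimal sketch. `network_subnet` is a hypothetical name, and `println` is the standard Go template builtin.

```shell
# Hypothetical helper: print the subnet(s) assigned to a Docker network by IPAM,
# one per line.
network_subnet() {
  docker network inspect -f '{{range .IPAM.Config}}{{println .Subnet}}{{end}}' "$1"
}
```

Comparing `network_subnet bridge` with `network_subnet alpine-net` shows the two separate ranges. On a host with no other custom networks, these are often `172.17.0.0/16` and `172.18.0.0/16`.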

Creating containers

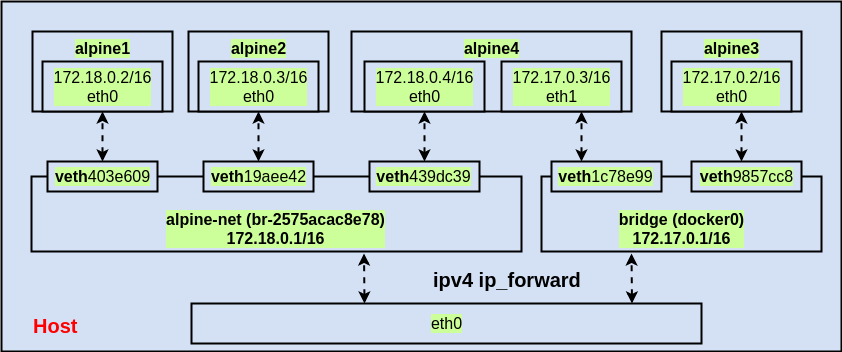

We create four containers in total (alpine1, alpine2, alpine3 and alpine4) with the following network settings:

| Container | Docker Network |
|---|---|
| alpine1 | alpine-net |
| alpine2 | alpine-net |
| alpine3 | docker0 |
| alpine4 | alpine-net + docker0 |

This is done with the following commands:

```shell
# Start the containers on alpine-net
```
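The table can be reproduced with commands along these lines. This sketch assumes the `alpine` image with its `ash` shell and follows Docker's standalone-networking tutorial rather than necessarily the author's exact commands. The Docker network backed by docker0 is named `bridge`, so that is the name `docker network connect` expects. `setup_topology` is a hypothetical wrapper name.

```shell
# Hypothetical wrapper: recreate the four-container layout from the table.
# Assumes alpine-net already exists (docker network create --driver bridge alpine-net).
setup_topology() {
  docker run -dit --name alpine1 --network alpine-net alpine ash
  docker run -dit --name alpine2 --network alpine-net alpine ash
  # No --network flag: alpine3 joins the default "bridge" network (docker0)
  docker run -dit --name alpine3 alpine ash
  # alpine4 starts on alpine-net, then is also attached to the default bridge
  docker run -dit --name alpine4 --network alpine-net alpine ash
  docker network connect bridge alpine4
}
```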

Next, check that the related network settings were created correctly:

```shell
# Check the veth devices
```
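Each container gets a veth pair. One end sits inside the container and the other end is attached to a host bridge. The sketch below lists the host-side ends per bridge using `ip link show master`. For a user-defined network, the host bridge is named `br-` followed by the first 12 characters of the network ID. Running `ip link show type bridge` lists it. `bridge_ports` is a hypothetical name.

```shell
# Hypothetical helper: list the interfaces (host-side veth ends) attached to a bridge.
# The "@ifN" suffix that ip prints for veth peers is stripped.
bridge_ports() {
  ip -o link show master "$1" | awk -F': ' '{ sub(/@.*/, "", $2); print $2 }'
}
```

With the layout above, `bridge_ports docker0` should show two veth devices, for alpine3 and alpine4. The alpine-net bridge should show three, for alpine1, alpine2 and alpine4.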

Because alpine4 is connected to two networks, let's take a closer look at its network configuration:

```shell
# alpine4 is connected to two software bridges, so two network interfaces show up
```
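The same information is available from the Docker side without entering the container. The sketch below ranges over `.NetworkSettings.Networks`, which Go templates visit in sorted key order, and prints one line per attached network. `container_networks` is a hypothetical name.

```shell
# Hypothetical helper: print "<network> <IP>" for every network a container is attached to.
container_networks() {
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}} {{$cfg.IPAddress}}{{"\n"}}{{end}}' "$1"
}
```

For alpine4, this should print two lines, one for `alpine-net` and one for `bridge`. These match the two interfaces seen inside the container.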

The network architecture now looks like the figure below:

Testing container networking

Finally, let's test connectivity between the containers:

```shell
# Test communication with alpine2
```

The tests above lead to the following conclusions:

- In a user-defined docker network, a container can reach other containers on the same network by name.
- Containers on different docker networks are isolated from each other and cannot communicate, whether by name or by IP.
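The second conclusion boils down to whether two containers share at least one network. The sketch below checks that from `docker inspect` data. Both function names, `networks_of` and `shared_networks`, are hypothetical.

```shell
# Hypothetical helper: print the names of the networks a container is attached to.
networks_of() {
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{println $name}}{{end}}' "$1"
}

# Hypothetical helper: print the networks two containers have in common.
# Empty output means no shared bridge, so the containers cannot talk directly.
shared_networks() {
  local other net
  other=$(networks_of "$2")
  networks_of "$1" | while read -r net; do
    if [ -n "$net" ] && printf '%s\n' "$other" | grep -qxF -- "$net"; then
      printf '%s\n' "$net"
    fi
  done
}
```

In this setup, `shared_networks alpine1 alpine3` prints nothing, which matches the isolation observed above. `shared_networks alpine4 alpine3` prints `bridge`.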

The first conclusion holds because Docker provides automatic service discovery on user-defined networks, which lets containers reach each other by name.
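On user-defined networks, this service discovery is handled by Docker's embedded DNS server. Containers see it as `127.0.0.11` in their `/etc/resolv.conf`. On the default bridge, the resolver configuration is instead derived from the host. The sketch below checks which case applies. `uses_embedded_dns` is a hypothetical name.

```shell
# Hypothetical helper: succeed if the container's resolver points at Docker's
# embedded DNS server (127.0.0.11), used for name lookups on user-defined networks.
uses_embedded_dns() {
  docker exec "$1" cat /etc/resolv.conf | grep -q '^nameserver 127\.0\.0\.11$'
}
```

`uses_embedded_dns alpine1` is expected to succeed, while `uses_embedded_dns alpine3` (default bridge only) is expected to fail.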

What happens if we run the tests on alpine4, which is connected to both the docker0 and alpine-net software bridges?

```shell
# Can reach the container on the alpine-net bridge (alpine1) by name
```

The tests on alpine4 lead to the following conclusions:

- Containers on the docker0 bridge cannot reach one another by domain name.
- Communicating with them by IP, however, works fine.
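Containers on docker0 therefore have to be addressed by IP. The sketch below looks up the target's address on the default `bridge` network and pings it from another container. `ping_by_ip` is a hypothetical name, and the sketch assumes the target is attached to the default bridge.

```shell
# Hypothetical helper: ping a container on the default bridge by IP instead of
# by name, since docker0 offers no name resolution between containers.
ping_by_ip() {
  local from="$1" to="$2" addr
  addr=$(docker inspect -f '{{.NetworkSettings.Networks.bridge.IPAddress}}' "$to")
  docker exec "$from" ping -c 2 "$addr"
}
```

`ping_by_ip alpine4 alpine3` is expected to succeed, whereas `docker exec alpine4 ping -c 2 alpine3` fails to resolve the name.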

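Given these results, if you want containers to communicate by domain name, remember to create a user-defined docker network for them. Otherwise, name-based communication will not work.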